Welcome To Thoughtnet: How AI-Powered Bots Are Reshaping Cyberwar

Cybersecurity teams must now contend with the Thoughtnet, an emerging threat that blends coordinated bot networks, AI-generated content, and targeted cyber attacks to undermine security and trust online.


Cybersecurity professionals know malicious hackers’ tactics, techniques, and procedures. The cyber threat landscape constantly evolves, from phishing emails to botnets to ransomware, as attackers find new ways to breach networks and compromise systems. But there’s an emerging hybrid threat that cybersecurity teams must be aware of: the Thoughtnet.

The Thoughtnet is an emerging threat that synthesizes elements of traditional cybersecurity attacks with narrative attacks and automated narrative attack campaigns. It’s a complex network of social media bots and sock puppet accounts deeply embedded in online communities associated with specific target audiences. The controlling entities behind these accounts activate them in coordination to monitor the conversation and identify opportune moments to inject chosen narratives or content, creating the illusion of organic grassroots support. By exploiting these groups’ trust and social dynamics, Thoughtnets can ‘launder’ false or misleading narratives, manipulating authentic community members into spreading them far and wide. This blending of automation and psychological manipulation makes the Thoughtnet a potent tool for shaping beliefs and behavior, but it is also a highly complex challenge for defenders.

The Thoughtnet plays a central role in most modern large-scale narrative attack campaigns. In short, it is a network of advanced bots that:

- Have digital personas aligning with a target audience,

- Are embedded into the network of the target audience,

- Are directed by a central controlling entity, and

- Rapidly cluster around posts expressing a given narrative.
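
The last trait in the list, rapid clustering around posts that express a given narrative, lends itself to a simple timing check. The sketch below is only an illustration under stated assumptions: the record fields (`id`, `ts`, `account`, `post_id`), the 120-second window, and the three-post threshold are all hypothetical, and real detection would need far more signal than reply timing alone.

```python
from collections import Counter

def fast_responders(posts, replies, window_s=120, min_hits=3):
    """Return accounts that replied within `window_s` seconds of at least
    `min_hits` distinct posts. Thresholds are illustrative assumptions."""
    post_time = {p["id"]: p["ts"] for p in posts}
    hits = Counter()
    counted = set()  # (account, post_id) pairs already counted once
    for r in replies:
        key = (r["account"], r["post_id"])
        if key in counted or r["post_id"] not in post_time:
            continue
        delay = r["ts"] - post_time[r["post_id"]]
        if 0 <= delay <= window_s:
            hits[r["account"]] += 1
            counted.add(key)
    return sorted(a for a, n in hits.items() if n >= min_hits)

# Hypothetical sample data: timestamps are seconds from an arbitrary origin.
posts = [{"id": "p1", "ts": 0}, {"id": "p2", "ts": 1000}, {"id": "p3", "ts": 5000}]
replies = [
    {"account": "acct_a", "post_id": "p1", "ts": 30},
    {"account": "acct_a", "post_id": "p2", "ts": 1045},
    {"account": "acct_a", "post_id": "p3", "ts": 5010},
    {"account": "acct_b", "post_id": "p1", "ts": 60},
    {"account": "acct_b", "post_id": "p2", "ts": 1100},
    {"account": "acct_b", "post_id": "p3", "ts": 5090},
    {"account": "acct_c", "post_id": "p1", "ts": 4000},  # slow reply, not counted
    {"account": "acct_c", "post_id": "p3", "ts": 5060},
]

suspects = fast_responders(posts, replies)
print(suspects)
```

Accounts that surface here are candidates for closer review, not confirmed bots: engaged human users can also respond quickly to posts they follow.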


Cyber threats and narrative attacks have some similarities. One is deception—both rely heavily on tricking targets into believing false information or trusting a malicious source. Advances in AI are supercharging this, enabling the generation of compelling fake audio, video, and text content.

The other core pillar is automation. Cyber attackers have long used botnets of controlled computers to amplify their efforts. Narrative attacks increasingly leverage similar networks of automated “sock puppet” accounts to spread misleading narratives online rapidly.

To illustrate these similarities, let’s examine the overlap between phishing campaigns and narrative attack operations. Both rely on emulating a trusted source to lower the target’s guard. Phishing could mean spoofing an email to make it look like it’s from the CEO or HR department. Narrative attacks often involve creating fake social media personas masquerading as real people or organizations.

The goals are similar, too: exploiting human interests and biases to influence behavior, often by micro-targeting specific demographics. For a phishing campaign, that could mean sending fake invoices to accounting personnel. Narrative attacks could mean pushing divisive organizational or executive team messaging to specific hyper-aggressive individuals or groups to target and disrupt business operations.

Then there’s the use of botnets to scale the attacks. With phishing, attackers may rent time on an existing botnet or compromise devices to build their own. With narrative attacks, the barriers to entry are even lower – all it takes is making fake accounts. The challenge is in making them convincing and embedding them into target communities.

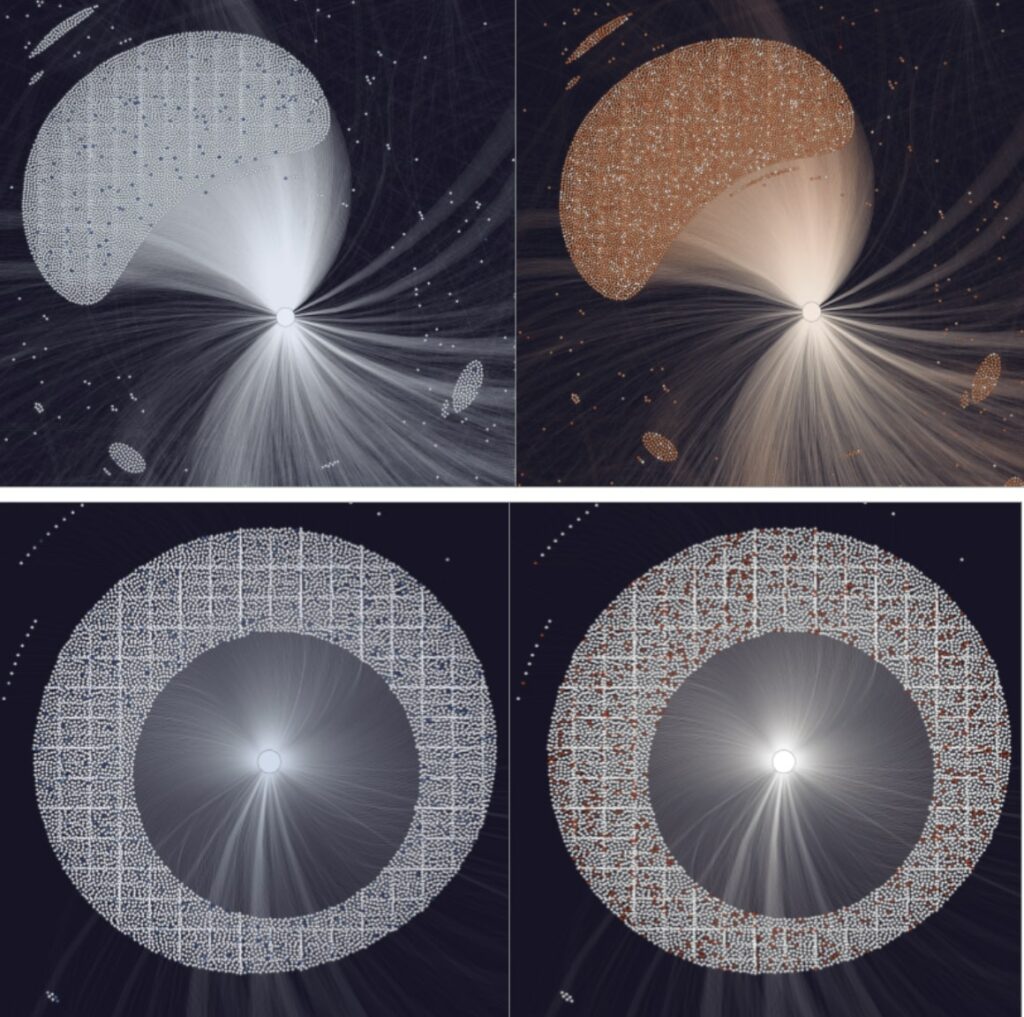

In both cases, botnets allow small efforts to balloon into large-scale threats. Researchers can visualize this dynamic using network graphs. Mapping how bot-powered amplification spreads phishing links or false narratives reveals the power of automation as a force multiplier for social engineering.
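
To make the force-multiplier effect concrete, here is a minimal sketch that compares how far a post spreads through a reshare graph with and without bot accounts. The graph, the account names, and the assumption that every listed account reshares are all hypothetical; the traversal is an ordinary breadth-first search, not a model of any real platform.

```python
from collections import deque

def reach(graph, seed, excluded=frozenset()):
    """Count accounts a post reaches from `seed` by following reshare
    edges, skipping any account in `excluded`."""
    if seed in excluded:
        return 0
    seen = {seed}
    queue = deque([seed])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen and nxt not in excluded:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen)

# Hypothetical reshare graph: key -> accounts that reshare the key's post.
graph = {
    "seed": ["bot1", "bot2", "user1"],
    "bot1": ["user2", "user3"],
    "bot2": ["user3", "user4"],
    "user1": ["user5"],
    "user4": ["user6"],
}
bots = {"bot1", "bot2"}

total = reach(graph, "seed")                  # spread with bots active
organic = reach(graph, "seed", excluded=bots)  # spread if bots are removed
print(total, organic)
```

In this toy graph, two bot accounts account for most of the spread, which is the dynamic a network graph visualization would show at a larger scale.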

This is the new normal for the threat landscape. Defenders must understand how these once-separate categories of cyber threats and narrative attacks combine into potent new risks. Thinking holistically and countering deception and automation across both domains will be vital to staying ahead of the curve.

Good work has been done in recent years to categorize and taxonomize different techniques in the field of narrative attack [See EU DisinfoLab’s FIMI]. Many have argued that the cornerstone ATT&CK framework, set forth by MITRE in 2013, is a suitable lens through which security professionals can understand narrative attacks. The DISARM framework has recently been employed to marry ATT&CK’s wide-ranging TTP classification with a narrative attack-specific focus. However, classification, detection, and defense lag far behind offensive capabilities in narrative attacks.

Deceit

Threat actors in either practice share a common core strategy to carry out their campaigns: deception. Deceit is a required component in successful phishing campaigns, where the momentary establishment of trust is necessary for the victim to click that link, wire that money, or offer up those credentials. In narrative attacks, deception is requisite at the same stage, inspiring the victim to share a provocative post, support an attacker’s cause, or spread manipulative information.

To achieve their intended goal, phishing attacks exploit the target’s trust, emulating a colleague, family member, or friend or spoofing a trusted email address, website, or phone number. In the practice of narrative attacks, this same process is necessary—albeit via different techniques.

At present, online conversation commands a certain level of trust: online and media literacy has not reached the point at which no one trusts anything they see online. This trust is foundational to continued social engagement. Still, it has eroded quickly in recent years as threat actors have learned to harness highly polarized online communities to spread adverse narratives organically.

This is done via a variety of tactics and techniques, many of them charted in the DISARM framework: co-opting partisan users and influencers, developing or spreading conspiracy theories, assuming a fitting persona in a trusting online community, distorting facts to suit a manufactured reality, and more. These techniques, especially when used in conjunction, come with a presupposition of authority and rigor. A narrative that suits the target’s worldview and has been shared at scale within the target’s community is often more than enough to establish trust.


Automation

Another core component of either practice is the usage of automation or bots. Botnets are an exceedingly cheap and relatively effective way of performing malicious tasks at scale for the modern cyber threat actor. They can be rented or bought at low cost, and when harnessed correctly, they cause myriad problems, especially for unsophisticated defenders.

With narrative attacks, curating and owning a network of sock puppet accounts is a near-prerequisite for generating inorganic influence online. When executed correctly, these accounts offer access to networks of ideologically driven organic users. When leveraged long-term, these networks create a ‘Narrative Laundering’ effect, rinsing astroturfed campaigns into organic discourse adopted among online communities. This workflow is especially compelling when paired with the simultaneous activation of subversive influencers who lend credibility to lesser-known users and channels.

Cruder uses of bots involve leveraging an entirely fabricated group of users to promote posts from a particular user or posts expressing a certain perspective. These networks are exceedingly easy to create, at low-to-zero cost to the threat actor. They can often be spotted through key identifying traits: inordinately high post or following counts, networks of shared or similar usernames, and floods of posts that read as nonsense are all common.
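
Two of the traits above, unusually high posting volume and clusters of near-identical usernames, can be checked with a few lines of code. The following is a rough sketch, not a production detector: the field names, the 200 posts-per-day cutoff, and the 0.8 similarity threshold are hypothetical values chosen for illustration, and account names are assumed to be unique.

```python
from difflib import SequenceMatcher

def username_similarity(a, b):
    """Similarity ratio in [0, 1] between two usernames, case-insensitive."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

def flag_accounts(accounts, max_posts_per_day=200, name_sim=0.8):
    """Map each account name to the set of crude bot-like traits it shows.
    Both thresholds are illustrative assumptions, not calibrated values."""
    flags = {a["name"]: set() for a in accounts}
    for a in accounts:
        if a["posts_per_day"] > max_posts_per_day:
            flags[a["name"]].add("high_post_rate")
    # Pairwise username comparison; fine for small samples, quadratic at scale.
    for i, a in enumerate(accounts):
        for b in accounts[i + 1:]:
            if username_similarity(a["name"], b["name"]) >= name_sim:
                flags[a["name"]].add("similar_username")
                flags[b["name"]].add("similar_username")
    return flags

# Hypothetical sample accounts.
accounts = [
    {"name": "patriot_eagle_1984", "posts_per_day": 450},
    {"name": "patriot_eagle_1985", "posts_per_day": 12},
    {"name": "patriot_eagle_1991", "posts_per_day": 380},
    {"name": "gardening_jo", "posts_per_day": 3},
]

flags = flag_accounts(accounts)
for name, traits in flags.items():
    print(name, sorted(traits))
```

Heuristics like these catch only the crudest networks. Better-resourced operators vary usernames and throttle posting precisely to avoid such checks.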


Combined, these two core elements compose what I call a ‘Thoughtnet’: a highly organized, curated, and automated group of sock puppet accounts integrated into politically or ideologically charged communities to foment narratives of the attacker’s choosing. Thoughtnets have been operating for some time but are only beginning to attract the public’s attention. The Thoughtnet’s efficacy lies in its scale and degree of integration into online circles.

Categorizing and tracking this threat is critical to understanding sophisticated narrative attacks and maintaining trust and integrity in the online information ecosystem. This technique is most often used by state-aligned or state-sponsored threat actors seeking to inject harmful narratives about organizations in order to cause physical, financial, or reputational harm.

Another core tool in the modern threat actor’s arsenal is the Distributed Denial of Service attack (DDoS). This, too, has a direct sibling in the field of narrative attack – what we at Blackbird.AI call the Distributed Denial of Trust (DDoT). The DDoT is characterized by a flood of malicious content into the online information environment, using techniques such as bot-enabled spamming, hashtag hijacking, and reply farming. This tactic is often employed to degrade and chip away at trust – whether in individuals, corporations, governments, or social fabric generally.

The DDoS is a favored technique of a wide array of threat actors ranging from script kiddies to advanced persistent threat groups for its versatility and low cost. While it is now well defended against, the DDoS attack is commonly used in concert with other simultaneous forms of attack.

Chaos + Distraction = Compromise

A final link between narrative attacks and cyber attacks is well established in war. Both are practical tools for creating chaos and instability, an ideal operating environment for the attacker at the onset of kinetic warfare. Launching narrative attacks in tandem with widespread targeted cyberattacks is now standard operating procedure between warring nations. This process is abundantly evident in Russia’s invasion of Ukraine, where vast information operations have worked for years to sow a select set of narratives offered by Putin at the onset of the war.

Some have argued that information warfare has entirely usurped cyber efforts in Russian offensive operations against Ukraine. The current evidence does not fully support this, given Russia’s extensive cyber attacks against Ukrainian critical infrastructure since the onset of the invasion and the comprehensive role of electronic warfare on the battlefield in Ukraine. However, more evidence supports the idea that information warfare may have taken a more central role in securing an advantage in a drawn-out conflict.

Blackbird.AI has custom-built narrative intelligence models to detect online behavioral and textual support for various political causes. These models establish a link between influence operations and automated online activity. They also include metrics indicating the relative likelihood that a given user is run by automation. Used in tandem, these provide an effective method for assessing automated narrative attack campaigns and surfacing early signals.


Case Study: Pro-Russian Bot Networks

The RAV3N Narrative Intelligence team at Blackbird.AI has closely followed the information environment surrounding Russia and Ukraine since the onset of the invasion on February 24, 2022. Diving into recent data analyzed by the team reveals a common thread – very often, bot-like users are embedded into ideologically driven communities to spread narrative attacks more effectively.

Much like threat intelligence teams can trace the IP addresses of endpoints, narrative attack researchers can reach tentative attribution by observing the behavior, networks, and history of sock puppet accounts. IP addresses don’t tell the entire story and can themselves be spoofed or obfuscated, but identifying an IP address that belongs to a CIDR range known to be compromised can sometimes begin to provide attribution. Similarly, concrete conclusions are difficult to draw when studying sock puppet accounts. Users can be as vague or descriptive as they prefer in providing identifying information on most social media sites, and threat actors tend to fabricate biographical details regardless.
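
On the cyber side of this comparison, checking whether an address falls inside a flagged CIDR range is a one-line membership test with Python's standard `ipaddress` module. The sketch below uses addresses from the reserved documentation ranges; the `SUSPECT_RANGES` list and the function name are hypothetical, and a match is only a starting point for attribution, for the reasons given above.

```python
import ipaddress

# Hypothetical watchlist built from reserved documentation ranges.
SUSPECT_RANGES = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.0/25"),  # covers 203.0.113.0 to 203.0.113.127
]

def matching_ranges(ip):
    """Return the watchlisted CIDR ranges (as strings) that contain `ip`."""
    addr = ipaddress.ip_address(ip)
    return [str(net) for net in SUSPECT_RANGES if addr in net]

inside = matching_ranges("203.0.113.77")
outside = matching_ranges("203.0.113.200")
print(inside, outside)
```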

One of the few tools narrative attack analysts have for attribution is observing, cataloging, and comparing behavior. Over time, our familiarity with pro-Russian information operations has allowed us to make more informed judgments about their possible sources by recognizing specific techniques, the Thoughtnet being a central one.

Recent data on general chatter about the war in Ukraine showed that users flagged by Blackbird.AI’s cohort system as pro-Russian were 60.7% more likely to be bots than the general pool of users observed. Furthermore, these same users were 18% more likely to engage in coordinated information campaigns, as indicated by our Anomalous metric, which measures coordinated inauthentic activity.
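
A figure such as "60.7% more likely" is easiest to interpret as a relative lift over a baseline rate. The article does not state the underlying rates or the exact statistic used, so the sketch below assumes a simple ratio of rates and uses a hypothetical 10% baseline purely to show the arithmetic.

```python
def relative_lift(cohort_rate, baseline_rate):
    """Relative increase of `cohort_rate` over `baseline_rate`.
    A result of 0.607 means 'about 60.7% more likely'."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return cohort_rate / baseline_rate - 1.0

def cohort_rate_from_lift(baseline_rate, lift):
    """Cohort rate implied by a baseline rate and a relative lift."""
    return baseline_rate * (1.0 + lift)

# Hypothetical baseline: assume 10% of all observed users look bot-like.
baseline = 0.10
implied = cohort_rate_from_lift(baseline, 0.607)
print(f"implied cohort rate: {implied:.4f}")
print(f"round-trip lift: {relative_lift(implied, baseline):.3f}")
```

Under that assumed baseline, the flagged cohort's bot-like share would be roughly 16%. The same lift applied to a different baseline yields a different absolute rate, which is why the baseline matters when comparing such figures.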

This concept parallels effective malware campaigns. Successfully embedding and immersing bot accounts into ideologically driven communities is comparable to gaining more privileges on a compromised system. The more privileges an endpoint has, the better the attacker’s chances of retrieving valuable data or resources from the target.

The Way Forward: Key Takeaways for Security Leaders

Recognize and Understand Hybrid Threats: Security leaders must educate themselves about the evolving cyber threat landscape, particularly the emergence of hybrid threats like the Thoughtnet. This understanding is crucial for developing comprehensive cybersecurity strategies that address both the technical and psychological dimensions of attacks.

Prioritize Deception and Automation Defenses: Invest in tools and training to detect and counteract Thoughtnets’ deception and automation tactics. This includes monitoring fake accounts and automated bots and implementing measures to protect the integrity of online communications and brand reputation.

Adopt a Holistic Security Approach: Integrate traditional cybersecurity measures with strategies to combat narrative attacks. This holistic approach should involve cross-departmental collaboration, including IT, marketing, and communications teams, to safeguard the organization against technical breaches and narrative attack campaigns.

Leverage AI for Defense: Invest in AI-powered cybersecurity tools that detect and mitigate AI-enhanced threats. These tools can help identify and respond effectively to sophisticated narrative attacks and automated attacks, ensuring the organization stays ahead of potential threats.

Promote Digital Literacy and Trust: Foster a culture of digital literacy within the organization by educating employees and stakeholders about the dangers of narrative attacks and the importance of verifying information. Building and maintaining trust in the organization’s communications is vital to countering the impact of narrative attacks and preserving the company’s reputation.

To learn more about how Blackbird.AI can help you with election integrity, book a demo.

Rennie Westcott • Senior Intelligence Analyst

Rennie Westcott is a Senior Intelligence Analyst at Blackbird.AI - researching & uncovering disinformation networks and state-backed information campaigns for public sector and private enterprise clients. Rennie specializes in deep & dark web investigations, and has deep experience investigating chat and forum sites for foreign malign influence campaigns, propaganda, and fraud.

