The Echo Chamber of Influence: How Social Media and AI are Redefining Misinformation and Disinformation

AI and social media have enabled the proliferation of curated personas, each catering to a specific audience and each capable of manipulating business, culture, and politics.

Wasim Khaled

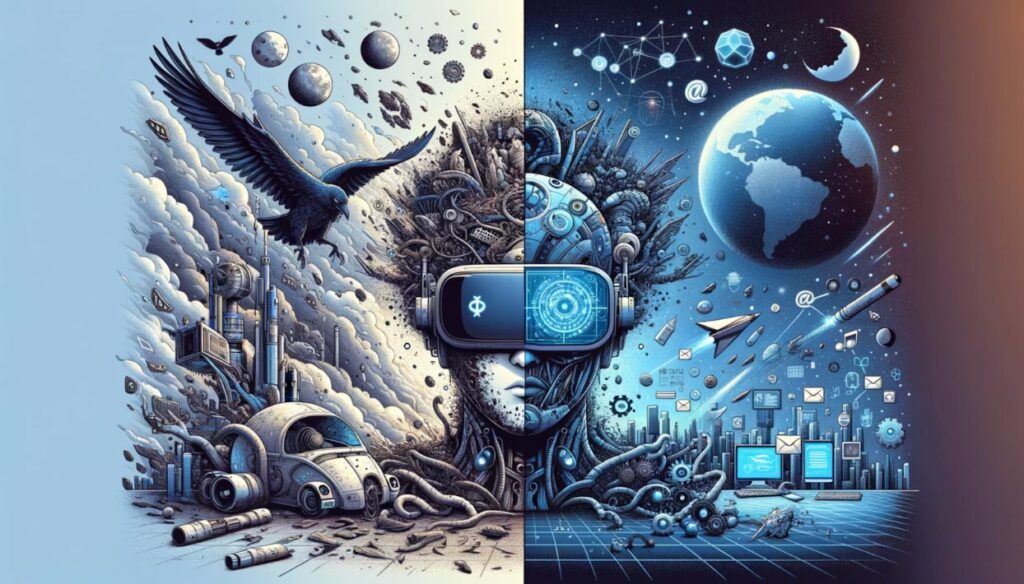

The lines between reality and virtual representations are becoming increasingly blurred. Once seen as authentic individuals sharing their lives online, social media influencers have evolved into carefully curated digital avatars shaped by the audiences they attract and the algorithms that govern their platforms.

This phenomenon is not limited to social media personalities but extends to various industries, from entertainment to politics. As generative AI technologies advance, digital avatars are taking on a new level of realism, sparking discussions about the implications of this blurring of reality and, perhaps more interestingly, about our eagerness to let these avatars substitute for their human predecessors.

THE INFLUENCER-AUDIENCE ECHO CHAMBER

Social media influencers tend to be influenced and shaped by the audiences they initially attract, leading to a self-reinforcing echo chamber and an alignment of values on their platforms of choice. As an influencer’s following grows, their behavior and beliefs align more closely with the desires and worldviews of their core audience on the platform, whether or not that is what the human behind the avatar truly believes in the offline world.

Influential figures have cultivated devoted, nearly cult-like followings on social media by constructing carefully crafted digital personas. These personas often align with the desires and beliefs of their core audience, creating a powerful echo chamber that amplifies their influence and authority. Some of these personalities, particularly in the tech and political spheres, have leveraged their massive online presence to shape public opinion, drive trends, and even challenge traditional power structures. Their followers hang on their every word, often defending them fervently, spreading their messages far and wide, and attacking critics.

This phenomenon transcends political or inflammatory topics and can occur within any niche, from health and wellness to music and gaming. Influencers, by virtue of their popularity and engagement metrics, become shackled to the audience they’ve cultivated, morphing into a concentrated representation of its collective desires. In optimizing their personas around such engagement, influencers also lean into the behaviors and topics that a particular platform’s algorithm rewards. The algorithm, in turn, works like a slot machine, serving up ad monetization, validation, and other dopamine hits that are as addictive as they are measurable. Often, these tendencies amplify misinformation and disinformation that affect millions of people even as they drive up usage and follower counts.

THE RISE OF DIGITAL AVATARS

Beyond the influencer-audience dynamic, digital avatars, the online personas we all present to the world across various platforms, are more “real” than one may realize, at times more so than our core beliefs. One person’s social media persona may be a provocative or “dubious” version of themselves, while another’s could showcase an idealized personal depiction. Platforms shape these personas, too: Facebook, for example, calls for a more family-friendly persona, while LinkedIn is tailored for professional advancement.

These digital avatars of ourselves do not necessarily reflect our true, authentic selves but rather carefully constructed personas designed to appeal to specific audiences or contexts. Moreover, the algorithms that govern these platforms further shape our online behavior, incentivizing content and actions that drive engagement and revenue.

We have become accustomed to accepting these digital avatars at face value, even when we know they do not represent the “real” person behind the account. This acceptance has paved the way for more advanced and realistic digital avatars powered by generative AI technologies.

THE ACCEPTANCE OF AI-GENERATED AVATARS

Valer Muzhchyna, a 35-year-old chatbot with a photorealistic avatar, has been promoted by the Belarusian political opposition as a candidate for upcoming elections. The rationale behind this move is that an AI candidate cannot be imprisoned or silenced, allowing the opposition to voice their platform and garner international attention.

While Valer Muzhchyna may not be on the official ballot, his existence as a digital avatar candidate highlights the growing acceptance and perceived credibility of such entities. It also raises questions about how much influence AI-generated candidates or public figures could wield and how they might challenge traditional democratic processes.

Another high-profile example is the growing concern among Hollywood workers regarding the normalization of synthetic personalities, AI scans, and digital replicas in the film industry. Many actors have been asked to participate in scans on set, often without clear explanations of how the footage will be used. The recent contract negotiations between SAG-AFTRA and AMPTP have highlighted the potential for actors’ work to be replaced by digital replicas, causing widespread confusion and concern. While some actors describe standard VFX imaging, others fear their scans could be used to train AI programs and develop full-body replicas without proper compensation. As AI technology advances, experts emphasize the need for clear communication, ethical practices, and safeguards to protect actors’ rights in the rapidly changing landscape of the entertainment industry.

Creators are getting in on the act, too. Kaitlyn Siragusa, a 29-year-old OnlyFans star known as Amouranth, has launched an AI chatbot clone of herself that allows fans to interact with a virtual version of her for a fee, with the basic service costing $1 per minute and an additional $36 per hour for voice messages. This follows a similar launch by Snapchat influencer Caryn Marjorie, with both chatbots developed by ForeverCompanion AI. While the bots were initially intended to provide companionship, there have been concerns about their potential misuse and impact on users’ mental health, leading to the implementation of features to detect and address problematic behavior.

THE PATH FORWARD

Decision-makers must maintain critical thinking and smart policies regarding online authenticity. While digital avatars and AI-generated representations may have their place in certain contexts, it is essential to cultivate a discerning eye and a healthy skepticism toward overly curated or potentially manipulative personas.

Here’s what this means for organizations and decision-makers:

- The erosion of authenticity: As we become more accustomed to interacting with digital avatars, the concept of authenticity and genuine human connection may erode. As we increasingly encounter carefully curated personas and AI-generated representations, we risk losing touch with what it means to engage with real, multi-dimensional individuals.

- The amplification of echo chambers: When combined with the potential reach and persuasiveness of AI-generated avatars, the influencer-audience echo chamber dynamic could further entrench polarized worldviews and disseminate misinformation or harmful ideologies.

- Ethical and legal challenges: Using AI-generated avatars in various contexts, from entertainment to politics, raises ethical and legal questions. Consent, privacy, and the potential for exploitation or manipulation must be carefully considered and addressed through appropriate regulations and guidelines.

- The blurring of reality and fiction: With the advent of highly realistic digital avatars, discerning truth from fiction may become increasingly challenging. This could have far-reaching implications for how we consume and interpret information, make decisions, and navigate the digital landscape.

- Significant implications for democracy and governance: The potential use of AI-generated candidates or public figures raises concerns about the integrity of democratic processes and the ability of citizens to make informed decisions based on authentic representations of candidates and their platforms.

Leaders must engage in thoughtful discussions and develop appropriate regulatory frameworks to address this phenomenon’s ethical, legal, and societal implications. By doing so, we can harness the potential benefits of these technologies while safeguarding against their misuse and ensuring the preservation of authenticity, trust, and democratic values.

About Blackbird.AI

BLACKBIRD.AI protects organizations from narrative attacks created by misinformation and disinformation that cause financial and reputational harm. Our AI-driven Narrative Intelligence Platform identifies key narratives that impact your organization or industry, the influence behind them, the networks they touch, the anomalous behavior that scales them, and the cohorts and communities that connect them. This information enables organizations to proactively understand narrative threats as they scale and become harmful, supporting better strategic decision-making. A diverse team of AI experts, threat intelligence analysts, and national security professionals founded Blackbird.AI to defend information integrity and fight a new class of narrative threats. Learn more at Blackbird.AI.